What Are Vector Databases: Powering the Next Wave of AI-Driven Experiences with AWS

In today’s AI-first world, the ability to understand and search unstructured data - like text, images, and audio - is transforming how appli...

Read More

AWS Glue is a powerful data engineering platform when it is designed, tuned, and governed correctly. But we are treating it as a simple ETL utility...

Read More

Dear DBA friends, it's time to upgrade to 8.4. Yes, we are getting notifications from all the cloud partners as well as on the customer sid...

Read More

As the business grows, our customer and transaction data grow with it. At the same time, performance needs to consi...

Read More

Wow! On migration projects, there is a lot more to convert when it comes to procedures, functions an...

Read More

Oracle Audit Log: Oracle Audit Log refers to the feature in Oracle Database that records and stores information about various database act...

Read More

Replicate with a customized parameter group: https://medium.com/@datablogs/cross-region-rds-replication-with-customized-parameter-group-fc29...

Read More

It's easy to achieve with a simple method: https://datablogs.medium.com/ora-01940-cannot-drop-a-user-that-is-currently-connected-and-kill-inact...

Read More

If you are new to AWS Glue, it's really difficult to run crawlers without these failures. Below are the basic steps you need to make sure ...

Read More

Customers always prefer low-cost solutions to run their business. To support their business and requirements, we also need to provide ...

Read More

We love MongoDB for its extraordinary features from a business perspective. Let's come to our blog discussion: only in PaaS environments, we have...

Read More

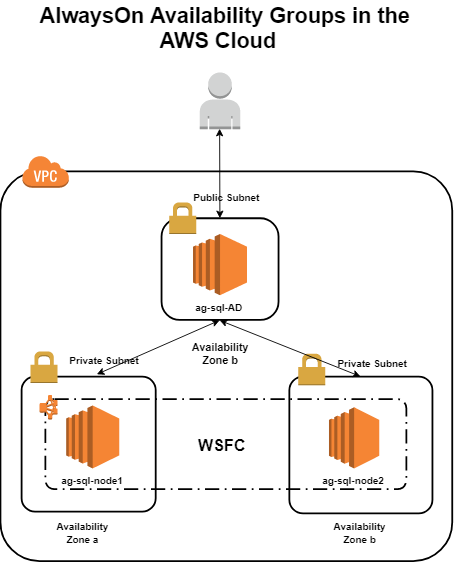

Microsoft delivers HA features like a charm. Across deployment tiers, from lower to higher cost, it provides many features to match business requirements. Replicat...

Read More